Semantic Reasoning with Structural Features in 2D Robot Grid-Maps

Author: Gabriele Firriolo

Introduction & Research Goal

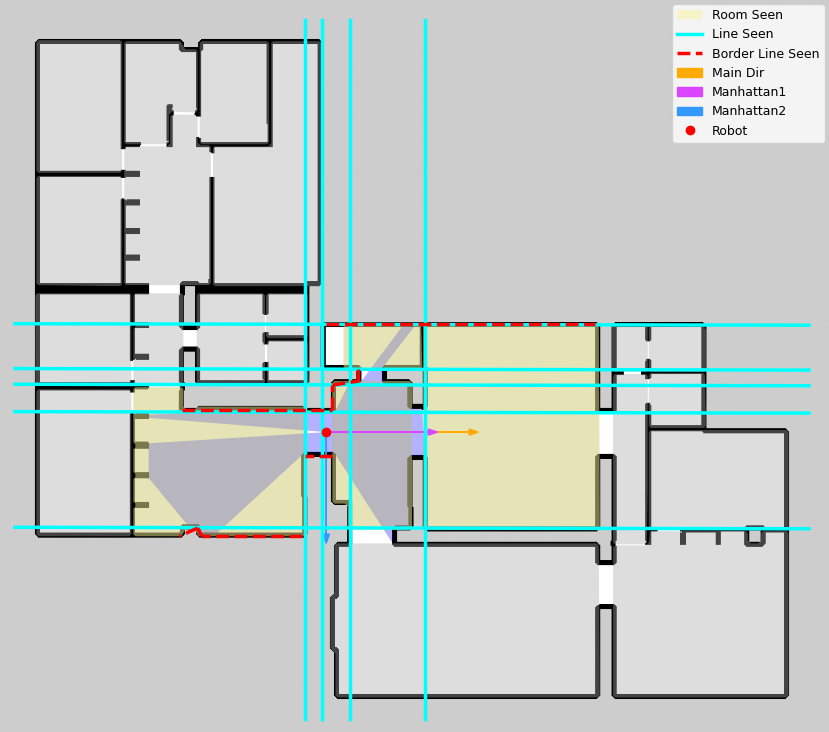

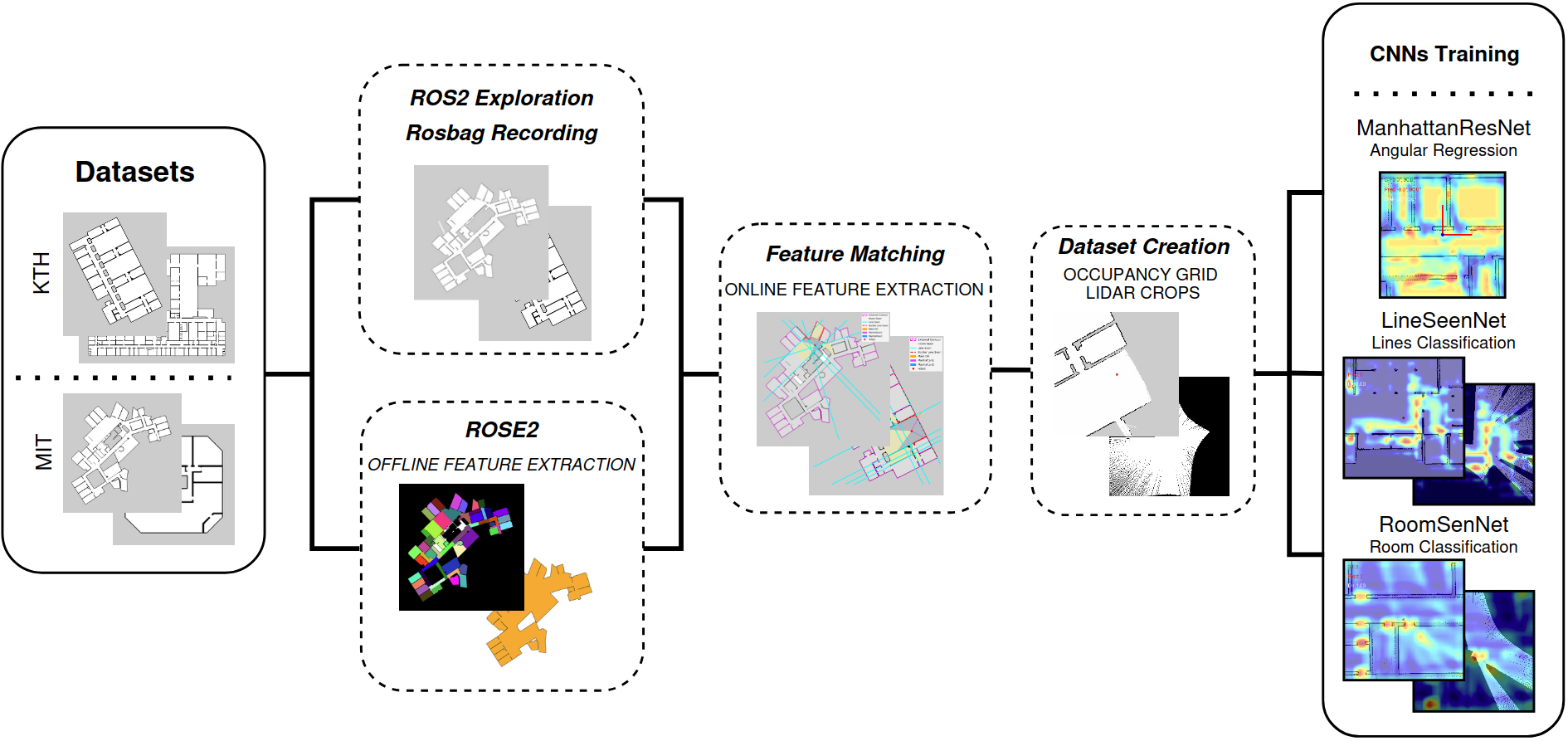

Traditional 2D SLAM produces reliable geometric maps but lacks a human-like understanding of space. This work integrates semantic reasoning into occupancy grid maps to infer structural features (dominant Manhattan directions, visibility of representative lines, room visibility, and border proximity) through a complete pipeline: autonomous exploration, ROSE2-based feature extraction, online feature matching, and supervised CNNs for inference.

Key objectives

- Bridge geometry and semantics by enriching classical occupancy maps with interpretable structural cues.

- Automate dataset creation through autonomous exploration, offline feature extraction, and online matching.

- Deploy lightweight CNNs able to generalize structural reasoning across unseen indoor layouts.

System Overview

- Autonomous Exploration & SLAM – A TurtleBot3 running frontier-based exploration and GMapping incrementally maps the environment, aiming for broad coverage while logging robot poses and LiDAR scans.

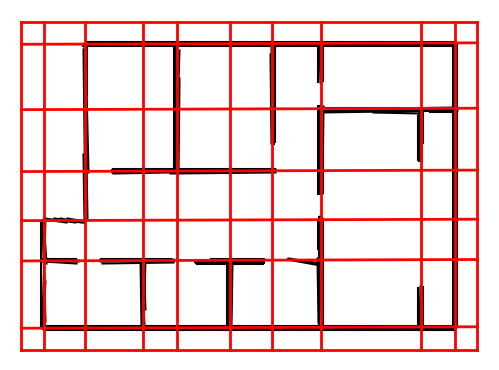

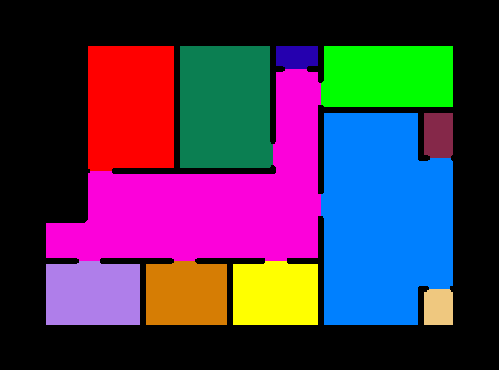

- ROSE2 Feature Extraction – Offline processing of the generated occupancy grids identifies Manhattan directions, representative lines, visible rooms, and borders, providing a structural abstraction of the map.

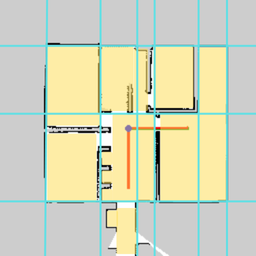

- Online Feature Matching – Simulated raycasting aligns each recorded pose with the extracted structural descriptors so that every local crop carries precise semantic labels.

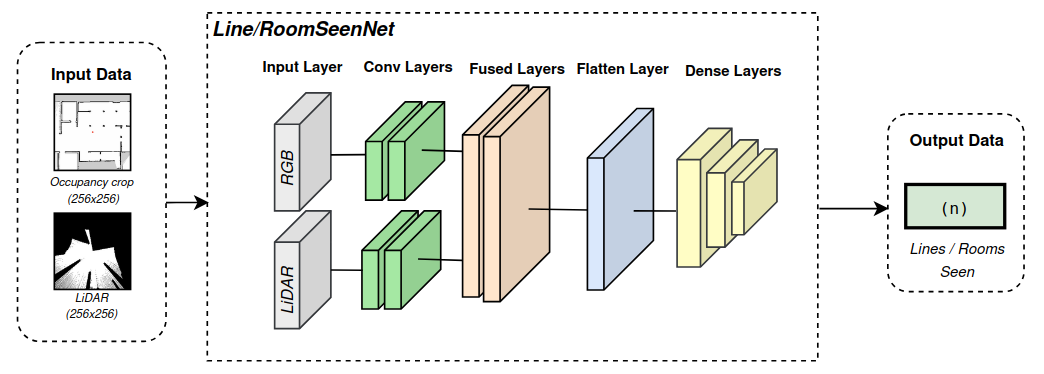

- Semantic Inference (CNNs) – Dedicated convolutional networks learn to predict structural properties directly from local map observations (and optional LiDAR projections), enabling semantic understanding at run time.
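The online matching step can be pictured as casting rays from a recorded pose through a grid whose occupied cells carry the ID of the representative line they belong to. The following is a minimal sketch under stated assumptions, not the thesis implementation: the grid encoding (0 = free, positive integer = line ID), the function name `visible_line_ids`, and the fixed sampling step are all hypothetical.

```python
import math

def visible_line_ids(grid, pose, n_rays=360, max_range=50.0, step=0.25):
    """Return the set of line IDs that are hit first by rays cast from `pose`.

    grid: list of rows; 0 is free space, a positive int is a (hypothetical) line ID.
    pose: (row, col) robot position in cell coordinates (floats allowed).
    """
    rows, cols = len(grid), len(grid[0])
    r0, c0 = pose
    seen = set()
    for k in range(n_rays):
        theta = 2.0 * math.pi * k / n_rays
        dr, dc = math.sin(theta), math.cos(theta)
        dist = 0.0
        while dist <= max_range:
            r = int(math.floor(r0 + dr * dist))
            c = int(math.floor(c0 + dc * dist))
            if not (0 <= r < rows and 0 <= c < cols):
                break  # the ray left the map without hitting anything
            label = grid[r][c]
            if label != 0:
                seen.add(label)  # first occupied cell along this ray
                break
            dist += step
    return seen

# Three vertical walls; the middle one hides the right one from the robot.
grid = [[1, 0, 0, 2, 0, 0, 3] for _ in range(7)]
print(visible_line_ids(grid, (3.5, 1.5)))  # {1, 2}
```

The size of the returned set would then serve as the "lines visibility" label for that pose. A production version would more likely use an exact grid traversal such as Bresenham or DDA instead of fixed-step sampling.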

Semantic Features

- Manhattan directions: capture the two dominant orthogonal axes typical of man‑made indoor layouts.

- Lines visibility: counts the representative structural lines visible from the robot's point of view.

- Rooms visibility: estimates how many room cells are partially or fully observable, signaling openness or enclosure.

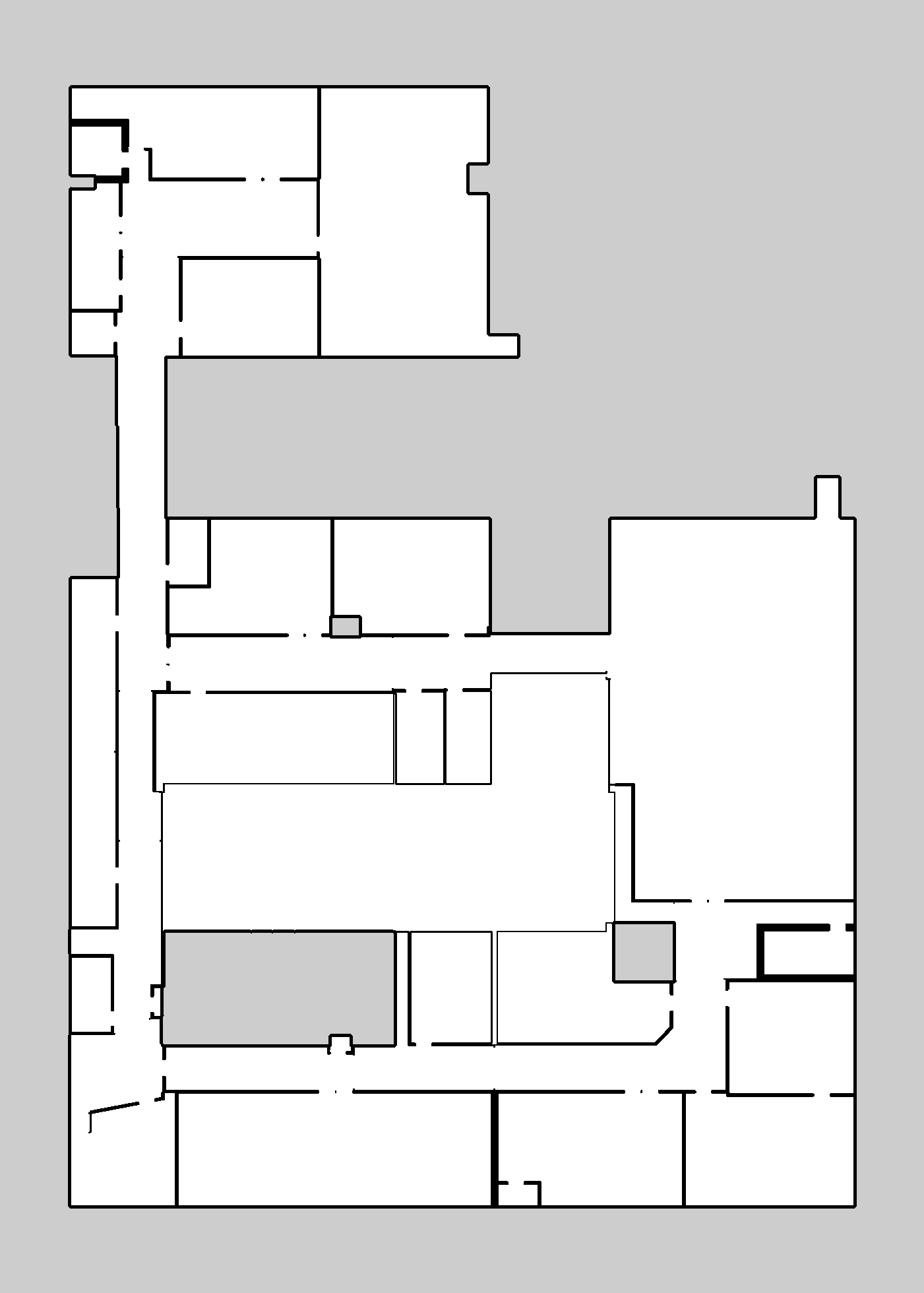

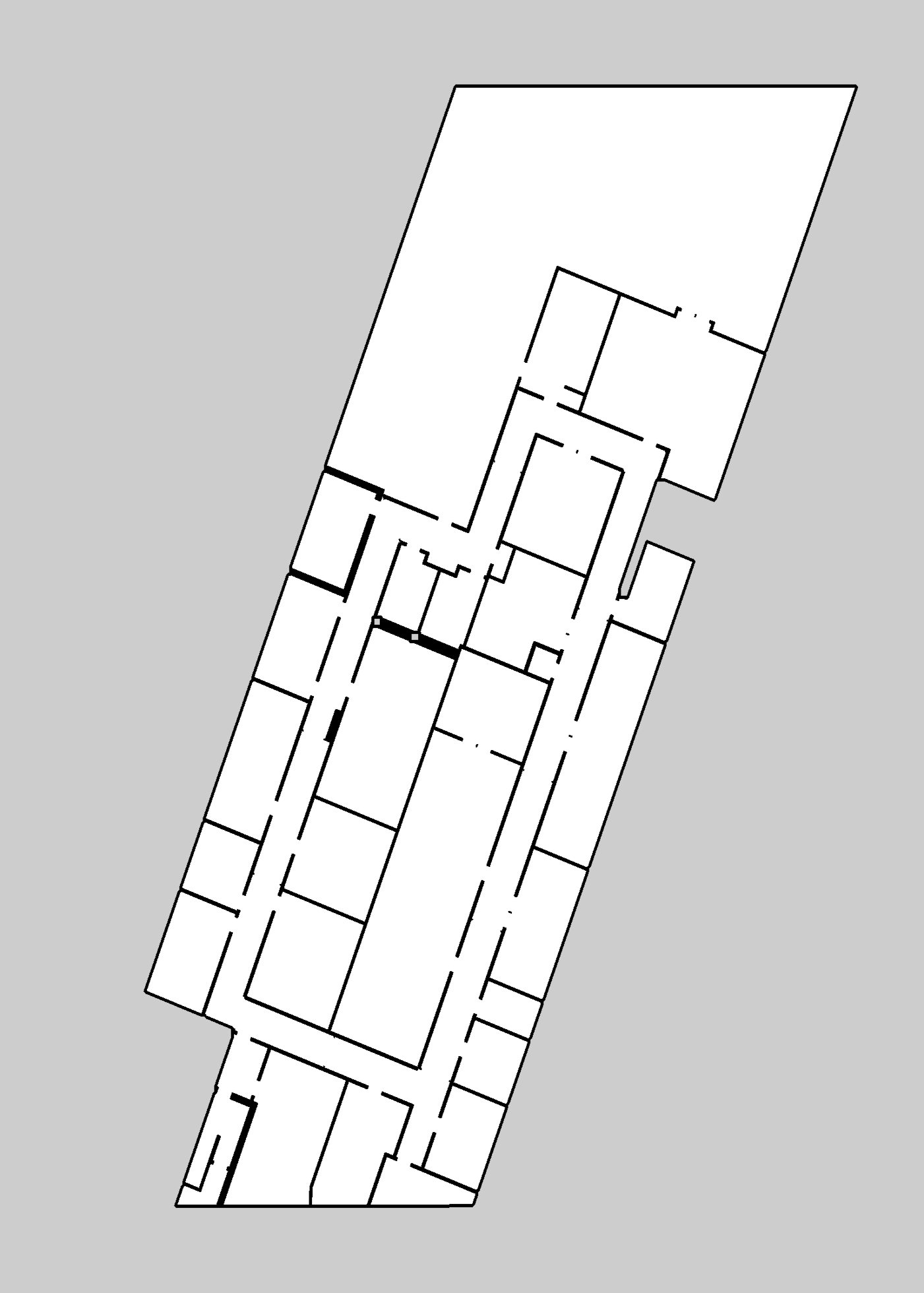

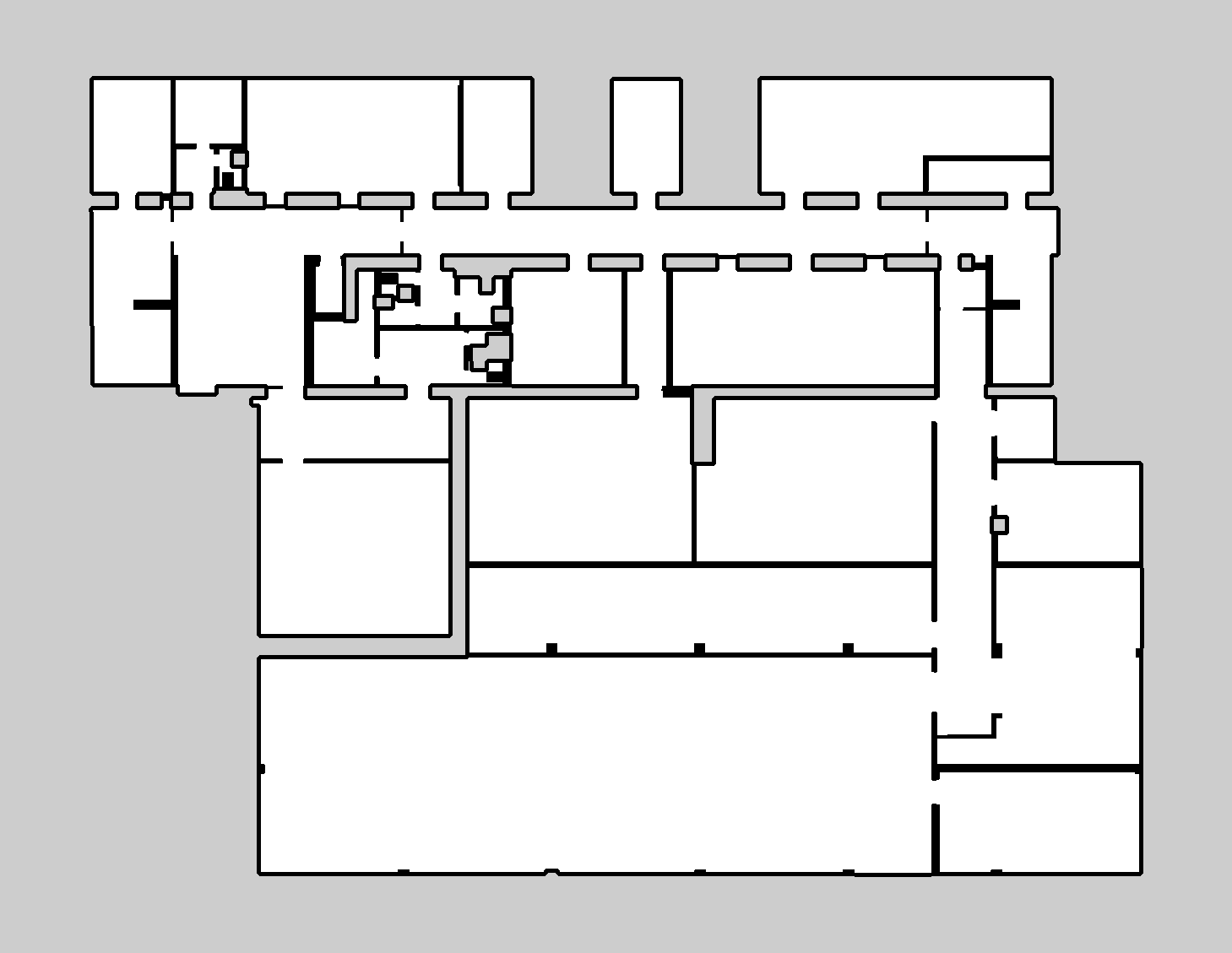
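To make the notion of a dominant Manhattan direction concrete, the sketch below estimates it from a set of wall segments using a length-weighted circular mean with a 90° period. This is a swapped-in simplification for illustration, not the ROSE2 procedure, and it assumes wall segments are already available; the function name and input format are hypothetical.

```python
import math

def dominant_manhattan_angle(segments):
    """Estimate the dominant axis, in degrees within [0, 90), from wall segments.

    segments: list of ((x1, y1), (x2, y2)) endpoints (hypothetical input format).
    Multiplying each angle by 4 makes directions that differ by 90 degrees
    coincide, so both Manhattan axes vote for the same value.
    """
    sx = sy = 0.0
    for (x1, y1), (x2, y2) in segments:
        length = math.hypot(x2 - x1, y2 - y1)
        theta = math.atan2(y2 - y1, x2 - x1)
        sx += length * math.cos(4.0 * theta)
        sy += length * math.sin(4.0 * theta)
    angle = math.degrees(math.atan2(sy, sx)) / 4.0
    return angle % 90.0

# Two walls of a room rotated by 30 degrees.
c30, s30 = math.cos(math.radians(30)), math.sin(math.radians(30))
walls = [((0, 0), (2 * c30, 2 * s30)), ((0, 0), (-s30, c30))]
first_axis = dominant_manhattan_angle(walls)   # close to 30 degrees
second_axis = first_axis + 90.0                # the orthogonal axis
```

Because the result is periodic, a value just below 90° describes the same frame as a value just above 0°, which matters when comparing estimates.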

Datasets

Experiments use ~100 indoor maps from the MIT and KTH datasets, covering both Manhattan and non‑Manhattan layouts.

- Coverage: ~50 MIT + ~50 KTH maps spanning Manhattan and non-Manhattan structures.

- Sampling: 256×256 occupancy crops paired with LiDAR projections and semantic labels per pose.

- Splits: 70% training, 15% validation, 15% test at map level to avoid leakage.
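A map-level split means that all samples from one map land in the same subset, so crops from a building seen in training never appear in validation or test. The sketch below shows one way to do this, assuming each sample is a dict with a `map_id` key; that key, the function name, and the seed are illustrative assumptions.

```python
import random

def split_by_map(samples, ratios=(0.70, 0.15, 0.15), seed=0):
    """Split samples into train/val/test so that no map spans two subsets.

    samples: list of dicts, each with a (hypothetical) 'map_id' key.
    """
    map_ids = sorted({s["map_id"] for s in samples})
    random.Random(seed).shuffle(map_ids)  # deterministic shuffle of map IDs
    n_train = round(ratios[0] * len(map_ids))
    n_val = round(ratios[1] * len(map_ids))
    groups = {
        "train": set(map_ids[:n_train]),
        "val": set(map_ids[n_train:n_train + n_val]),
        "test": set(map_ids[n_train + n_val:]),
    }
    return {name: [s for s in samples if s["map_id"] in ids]
            for name, ids in groups.items()}

# 100 maps with 3 poses each: the maps are divided 70 / 15 / 15.
samples = [{"map_id": m, "pose": p} for m in range(100) for p in range(3)]
parts = split_by_map(samples)
```

Splitting at the pose level instead would let near-identical crops of the same room fall on both sides of the split and inflate test scores.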

Models

| Task | Input | Target | Formulation |

|---|---|---|---|

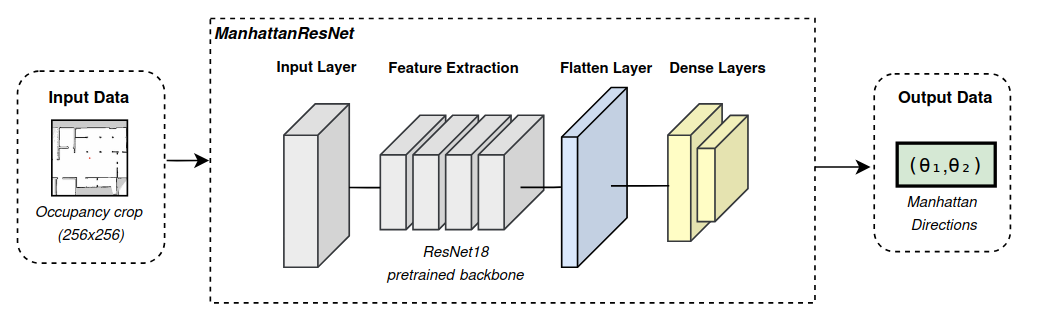

| Manhattan Directions | Occupancy crop (256×256) | Two dominant angles (°) | Regression (CNN) |

| Lines Visibility | Occupancy crop + LiDAR projection | Count of visible representative lines | Classification (CNN) |

| Rooms Visibility | Occupancy crop + LiDAR projection | Count of visible rooms | Classification (CNN) |

Model highlights

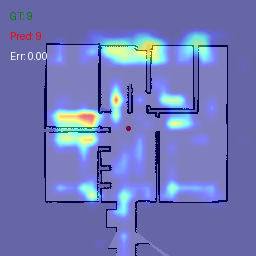

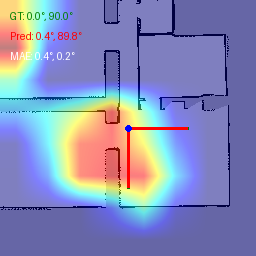

- ManhattanResNet leverages a ResNet18 backbone to regress the two dominant orientation angles with sub‑4° MAE on unseen maps.

- Fusion classifiers combine occupancy crops and synthetic LiDAR projections to robustly estimate the number of visible lines and rooms.

- Lightweight custom CNNs provide fast inference for deployments where GPU resources are limited.
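The fusion classifiers take a LiDAR projection as a second input alongside the occupancy crop. One plausible way to build such a projection is to rasterize scan endpoints into a robot-centered image. The sketch below assumes a 256×256 image, a 0.05 m cell size, and a 10 m range cap; these values and the function name are assumptions rather than the thesis settings. The `angle_min` and `angle_increment` parameters mirror the fields of a ROS `sensor_msgs/LaserScan` message.

```python
import math

def project_scan(ranges, angle_min, angle_increment, size=256,
                 resolution=0.05, max_range=10.0):
    """Rasterize LiDAR endpoints into a size x size binary image, robot at the center."""
    image = [[0] * size for _ in range(size)]
    center = size // 2
    for i, rng in enumerate(ranges):
        if not (0.0 < rng <= max_range):
            continue  # skips zero, NaN, inf, and out-of-range readings
        theta = angle_min + i * angle_increment
        col = center + int(round(rng * math.cos(theta) / resolution))
        row = center - int(round(rng * math.sin(theta) / resolution))  # rows grow downward
        if 0 <= row < size and 0 <= col < size:
            image[row][col] = 1
    return image

# Two beams of 1 m: one straight ahead, one 90 degrees to the left.
img = project_scan([1.0, 1.0], angle_min=0.0, angle_increment=math.pi / 2)
```

The resulting image can be stacked with the occupancy crop as an extra channel or fed to a separate branch, depending on how the fusion is designed.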

Results & Performance

| Task | Best Model | Test MAE | Inference MAE (unseen maps) |

|---|---|---|---|

| Manhattan Directions | ResNet‑bb | 1.85° | 3.59° |

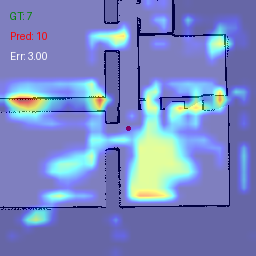

| Lines Visibility | Fusion (RGB + LiDAR) | 0.73 (test MAE) | 1.62 |

| Rooms Visibility | Fusion (RGB + LiDAR) | 0.69 (test MAE) | 1.04 |

Takeaways

- Robust orientation inference (MAE 1.85° on test, 3.59° on unseen maps).

- LiDAR fusion yields the best generalization for the line and room visibility tasks (inference MAE 1.62 / 1.04).

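- Errors grow when moving from the held-out test set to entirely unseen environments (roughly 1.5× to 2.2×) but stay below 4° for orientation and below two counts for visibility; map-level splits keep the test estimates free of leakage.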
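When reading the orientation MAE, note that angle errors need wrap-around handling: a prediction of 179° against a target of 1° is off by 2°, not 178°, if directions repeat every 180°. The source does not state how the angular error was computed, so the sketch below is only an assumption about one reasonable definition; the `period` default and function names are illustrative.

```python
def angular_error(pred_deg, true_deg, period=180.0):
    """Smallest absolute difference between two angles that repeat every `period` degrees."""
    diff = abs(pred_deg - true_deg) % period
    return min(diff, period - diff)

def angular_mae(preds, targets, period=180.0):
    """Mean of the wrap-around angular errors over paired predictions and targets."""
    errors = [angular_error(p, t, period) for p, t in zip(preds, targets)]
    return sum(errors) / len(errors)

print(angular_error(179.0, 1.0))                    # 2.0
print(angular_mae([179.0, 10.0], [1.0, 12.5]))      # 2.25
```

For a Manhattan frame, where the two axes are orthogonal, `period=90.0` may be the more natural choice.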

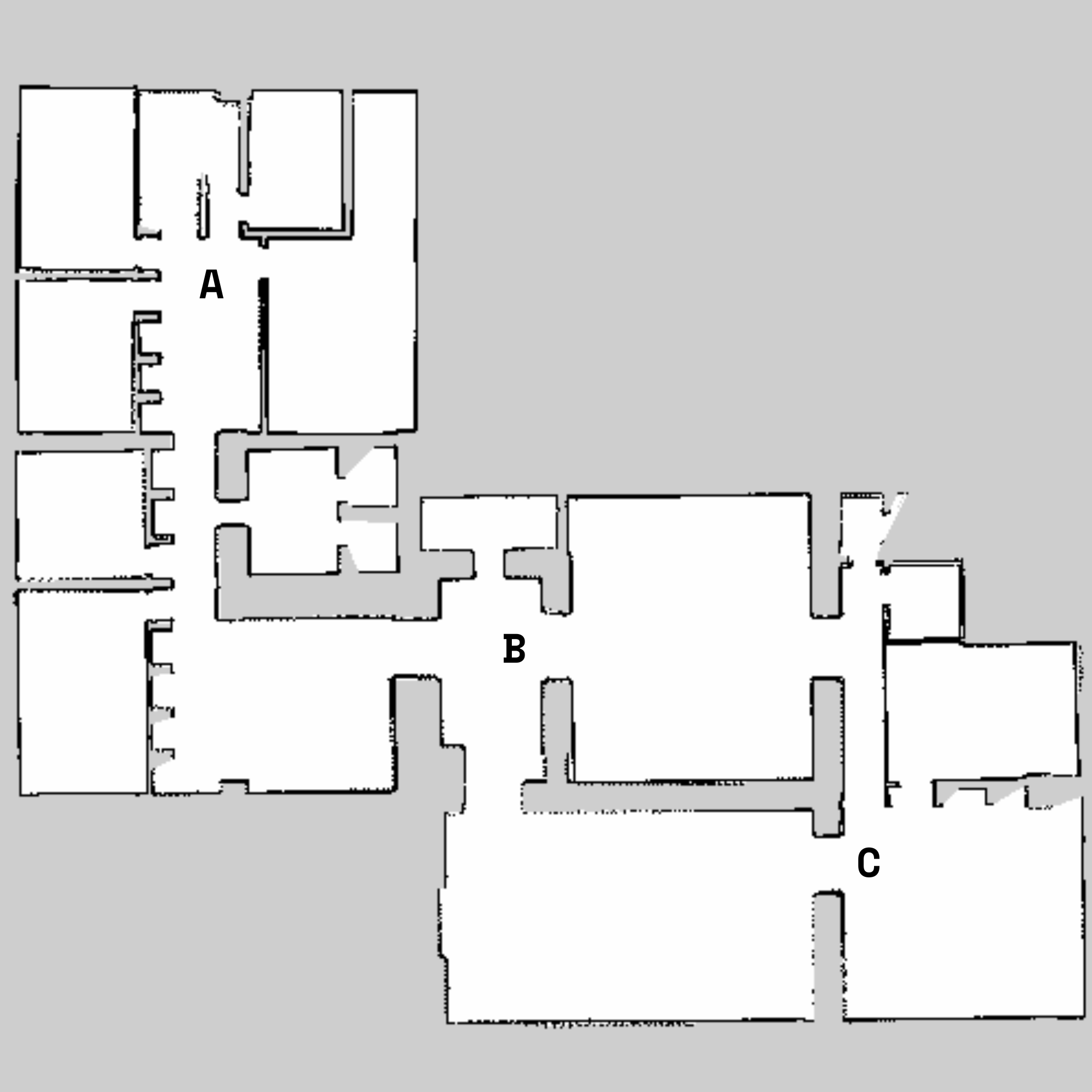

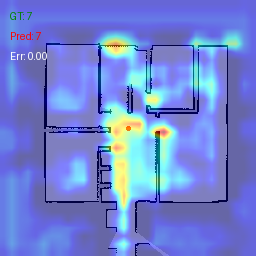

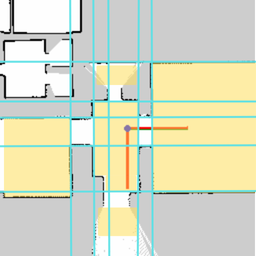

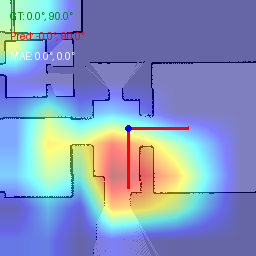

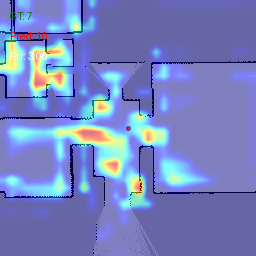

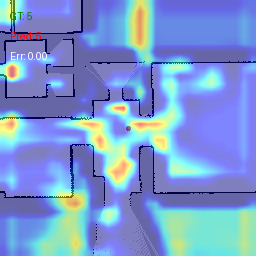

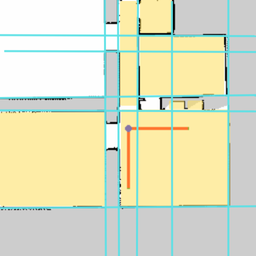

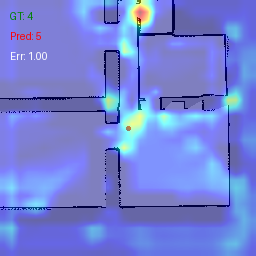

Qualitative examples (A, B, C): predicted features and Grad‑CAMs.

Implementation Highlights (ROS2)

- Automation workspace (tb3_stage_explore_ws) bundles:

  - Stage + TurtleBot3 simulation scenarios with Nav2 navigation and recovery behaviours.

  - Custom ROS2 Jazzy forks of ros2_gmapping and m-explore-ros2 for consistent map resolution and frontier selection.

  - Docker orchestration that automates parallel exploration runs, rosbag logging, and map snapshots, each in its own container.

- ROSE2 + raycasting pipeline transforms raw logs into richly annotated datasets ready for supervised learning.

Future Work

- Broader datasets & clutter to stress-test generalization beyond clean academic maps.

- Unified multi-task backbones that share features across orientation, lines, and rooms inference.

- Real-time integration of semantic predictions into the robot decision-making loop.

- Extended semantics (e.g., doorways, corridors, navigability scores) for richer environment understanding.

Note: Full presentation slides and the complete thesis are linked in the downloads section at the top of this page. If you are interested, feel free to explore the content or reach out to me for more details.