WebScraping SOA – Automated Attestation Harvester

Author: Gabriele Firriolo

Overview & Motivation

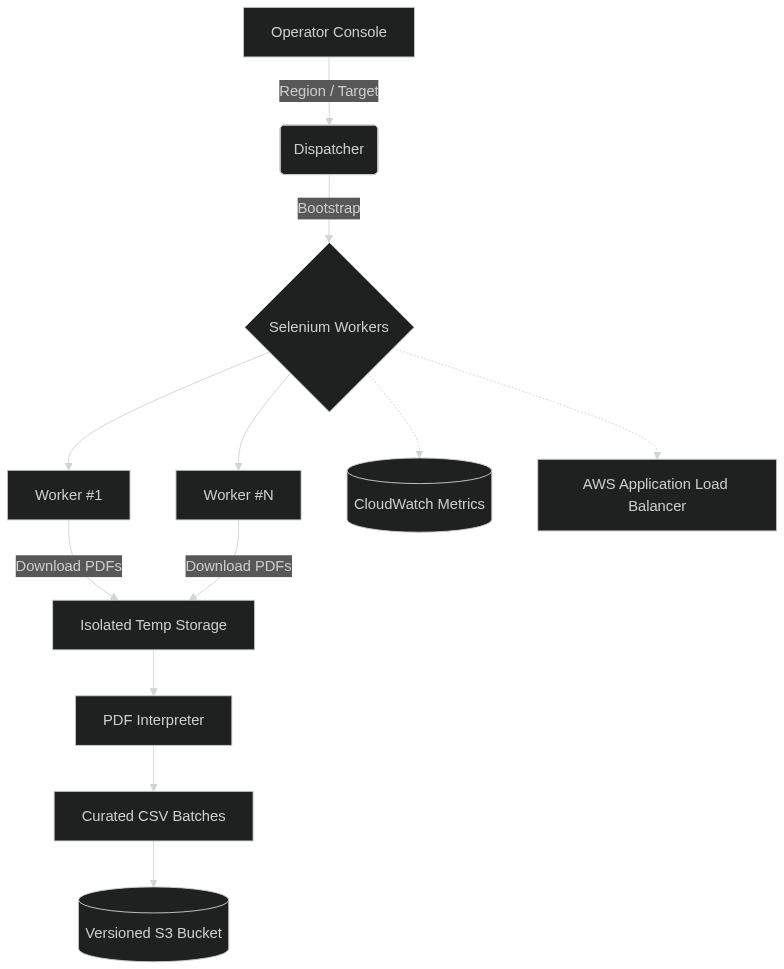

WebScraping SOA is a Python-driven platform that collects public attestations at scale. A lightweight orchestration layer lets operators focus on region-wide or company-specific audits, while the backend automates navigation, document download, PDF interpretation, and the creation of curated CSV batches for downstream analytics. The emphasis is on high throughput, full traceability, and compliance with the automation terms published by the service.

Architecture Highlights

Acquisition Layer

- Selenium workers drive a real browser through the dynamic single-page application, so every lazy-loaded panel is rendered before extraction.

- Rotating user agents and optional proxy pools spread outbound traffic across multiple client profiles and egress addresses; load on the origin itself is bounded by the pacing controls described below.

- Small randomized delays between actions keep the cadence respectful and align with the site's published automation policy.
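The randomized delays can be pictured as a fixed floor plus uniform jitter, so the cadence is never faster than the floor. This is a minimal sketch, not the project's code: the class name `JitteredPacer` and the default values are hypothetical, and `sleep` is injectable so the helper can be exercised without actually waiting.

```python
import random
import time
from typing import Callable, Optional


class JitteredPacer:
    """Hypothetical helper: waits base_delay + U(0, jitter) seconds between actions."""

    def __init__(
        self,
        base_delay: float = 1.5,  # illustrative floor, not the project's setting
        jitter: float = 1.0,      # illustrative jitter range
        rng: Optional[random.Random] = None,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        if base_delay < 0 or jitter < 0:
            raise ValueError("delays must be non-negative")
        self.base_delay = base_delay
        self.jitter = jitter
        self.rng = rng or random.Random()
        self.sleep = sleep

    def next_delay(self) -> float:
        # Uniform jitter on top of a fixed floor: never faster than base_delay.
        return self.base_delay + self.rng.uniform(0.0, self.jitter)

    def pause(self) -> float:
        # Sleep for one randomized interval and report how long it was.
        delay = self.next_delay()
        self.sleep(delay)
        return delay
```

A worker would call `pacer.pause()` between clicks, panel expansions, and downloads; keeping the floor separate from the jitter makes the slowest-allowed cadence explicit.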

Document Intelligence

- The PDF pipeline combines structured XFA form parsing with table-reconstruction fallbacks to capture company metadata, certifications, and classification codes even when layouts drift.

- A normalization step turns the extracted payloads into tidy records that can be appended to regional CSV batches without manual cleaning.
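The fallback-then-normalize flow can be sketched as an ordered list of extractors, where the first one that yields a complete payload wins and its name is kept for the audit trail. This is an illustration under assumptions: the real XFA and table parsers are replaced by caller-supplied functions, and the field names (`company_name`, `certifications`, `classification_codes`) and the `;` list separator are hypothetical choices for the CSV layout.

```python
import re
from typing import Any, Callable, Dict, Optional, Sequence, Tuple

Payload = Dict[str, Any]
Extractor = Callable[[bytes], Optional[Payload]]

# Hypothetical schema for one tidy record.
REQUIRED_KEYS = ("company_name", "certifications", "classification_codes")


def extract_with_fallback(
    pdf_bytes: bytes, extractors: Sequence[Tuple[str, Extractor]]
) -> Tuple[Payload, str]:
    """Try extractors in order; return the first complete payload and its path name."""
    for name, extractor in extractors:
        try:
            payload = extractor(pdf_bytes)
        except Exception:
            continue  # a parser crash on a malformed PDF just moves on to the next path
        if payload and all(key in payload for key in REQUIRED_KEYS):
            return payload, name
    raise ValueError("no extractor produced a complete payload")


def _clean(text: str) -> str:
    # Collapse runs of whitespace and trim the ends.
    return re.sub(r"\s+", " ", text).strip()


def normalize_record(payload: Payload) -> Dict[str, str]:
    """Flatten a payload into CSV-ready strings with stable, de-duplicated lists."""
    codes = sorted({_clean(c).upper() for c in payload["classification_codes"] if _clean(c)})
    certs = sorted({_clean(c) for c in payload["certifications"] if _clean(c)})
    return {
        "company_name": _clean(payload["company_name"]),
        "certifications": ";".join(certs),
        "classification_codes": ";".join(codes),
    }
```

With an XFA extractor that returns `None` and a table extractor that returns `{"company_name": "  ACME   Costruzioni srl ", "certifications": ["ISO 9001"], "classification_codes": ["og1", "os30"]}` (made-up sample data), the path reported is `"tables"` and the normalized codes become `"OG1;OS30"`. Sorting and de-duplicating lists keeps re-extractions of the same document byte-identical in the CSV.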

Data Products & Observability

- Regional CSV exports are produced incrementally, supporting resumable campaigns without duplicate entries.

- Audit logs consolidate counts of visited companies, successful extractions, fallback paths, and elapsed time, offering a complete operational trail.

- Aggregated metrics feed dashboards so stakeholders can gauge progress and quality at a glance.
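Incremental, duplicate-free exports can be sketched by reading the IDs already present in a regional CSV before appending, and flushing after each row so an interrupted run loses at most the row in flight. The `company_id` column and the function names are hypothetical; the source does not say how companies are keyed.

```python
import csv
import os
from typing import Dict, Iterable, Set

# Hypothetical column layout for a regional batch.
FIELDS = ["company_id", "company_name", "certifications", "classification_codes"]


def load_written_ids(path: str) -> Set[str]:
    """Return the company IDs already present in a regional CSV (empty if none)."""
    if not os.path.exists(path):
        return set()
    with open(path, newline="", encoding="utf-8") as fh:
        return {row["company_id"] for row in csv.DictReader(fh)}


def append_records(path: str, records: Iterable[Dict[str, str]]) -> int:
    """Append only unseen records; return how many rows were written."""
    seen = load_written_ids(path)
    # Decide on the header before opening in append mode, which creates the file.
    new_file = not os.path.exists(path) or os.path.getsize(path) == 0
    written = 0
    with open(path, "a", newline="", encoding="utf-8") as fh:
        writer = csv.DictWriter(fh, fieldnames=FIELDS)
        if new_file:
            writer.writeheader()
        for record in records:
            if record["company_id"] in seen:
                continue  # exported by an earlier run or earlier in this batch
            writer.writerow(record)
            seen.add(record["company_id"])
            written += 1
            fh.flush()  # push each row to the OS before moving on
    return written
```

Calling `append_records` twice with overlapping batches writes each company once, which is what lets a paused campaign simply be restarted against the same file.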

Browser Automation Strategy

- Why Selenium: the target portal renders critical information via dynamic JavaScript widgets; simple HTTP scraping would miss large portions of the dataset. Selenium drives a real browser, so the page's JavaScript executes and the fully rendered DOM is available for extraction through the automation access the site permits.

- Compliance: the service explicitly authorises Selenium-based access for data portability. Credentials, consent, and rate limits are enforced inside the platform.

- Rotating proxies: long-running campaigns can route requests through vetted proxy pools to distribute load and respect geographic throttling rules.

- Politeness by design: deliberate pauses and capped concurrency keep request rates within the boundaries established by the site.
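Capped concurrency and a site-wide request budget can be combined by giving a fixed-size worker pool a single shared token bucket: the pool limits how many pages are open at once, and the bucket limits how many actions start per second across all workers. This is a sketch assuming a thread-based pool; `TokenBucket`, `run_polite`, and the rates shown are hypothetical, not values from the project.

```python
import threading
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


class TokenBucket:
    """Thread-safe token bucket: `rate` tokens/second on average, bursts up to `capacity`."""

    def __init__(self, rate: float, capacity: int) -> None:
        if rate <= 0 or capacity < 1:
            raise ValueError("rate must be > 0 and capacity >= 1")
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.updated = time.monotonic()
        self.lock = threading.Lock()

    def acquire(self) -> None:
        while True:
            with self.lock:
                now = time.monotonic()
                # Refill in proportion to elapsed time, never beyond capacity.
                self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
                self.updated = now
                if self.tokens >= 1:
                    self.tokens -= 1
                    return
                wait = (1 - self.tokens) / self.rate
            time.sleep(wait)  # sleep outside the lock so other workers can check in


def run_polite(
    tasks: Iterable[T], work: Callable[[T], R], bucket: TokenBucket, max_workers: int = 2
) -> List[R]:
    """Run `work` over tasks with at most `max_workers` in flight; each call takes a token first."""

    def guarded(task: T) -> R:
        bucket.acquire()
        return work(task)

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(guarded, tasks))  # results keep input order
```

With `TokenBucket(rate=0.5, capacity=1)`, for example, the whole pool starts at most one action every two seconds on average, no matter how many workers are configured.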

Cloud Footprint

- Containerised workers run on AWS Fargate behind an Application Load Balancer, enabling horizontal scaling without server maintenance.

- Autoscaling reacts to queue depth and CPU metrics, adding workers only when regional backlogs grow.

- CSV outputs land in versioned S3 buckets, while logs and telemetry stream into CloudWatch for monitoring and alerting.

- Secrets (proxy credentials, storage keys) stay in AWS Secrets Manager and are injected at runtime.
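The queue-depth side of the scaling rule can be expressed as a backlog-per-worker target: run just enough workers that none has more than a set number of queued companies, clamped to a floor and a ceiling. This is a hypothetical calculation for illustration only; it does not reproduce the AWS Application Auto Scaling policy configuration, and it leaves out the CPU signal the text also mentions.

```python
import math


def desired_worker_count(
    backlog: int, target_per_worker: int, min_workers: int = 0, max_workers: int = 20
) -> int:
    """Workers needed so each handles at most `target_per_worker` queued companies."""
    if target_per_worker <= 0:
        raise ValueError("target_per_worker must be positive")
    needed = math.ceil(backlog / target_per_worker) if backlog > 0 else 0
    # Clamp so a sudden backlog cannot exceed the cap and an empty queue can scale to the floor.
    return max(min_workers, min(max_workers, needed))
```

For example, a backlog of 120 companies with a target of 50 per worker gives 3 workers, while a backlog of 5,000 is capped at the default ceiling of 20.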

Orchestration Diagram

Resilience & Ethical Scraping

- Selenium exceptions, malformed PDFs, and recurring pop-ups trigger contained restarts so campaigns continue without supervision.

- Each processed company is flagged in a per-region register, so campaigns can resume safely after pauses or maintenance windows.

- Respectful pacing and proxy diversity maintain long-term access by adhering to the norms communicated by the service.
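Contained restarts and register-based resumption fit together in one loop: each company gets a bounded number of attempts, a failure triggers a session restart rather than ending the run, and only successful companies are written to the register. The counters also give the kind of per-run summary the audit logs describe. Everything here is a hypothetical sketch: the one-ID-per-line register format, `run_campaign`, `restart`, and the counter names are assumptions, and `process` stands in for the real navigation-plus-extraction step.

```python
import json
import time
from collections import Counter
from pathlib import Path
from typing import Callable, Iterable, Set


class RegionRegister:
    """Hypothetical per-region register file: one processed company ID per line."""

    def __init__(self, path: Path) -> None:
        self.path = path
        self.done: Set[str] = set()
        if path.exists():
            lines = path.read_text(encoding="utf-8").splitlines()
            self.done = {line.strip() for line in lines if line.strip()}

    def mark(self, company_id: str) -> None:
        # Append immediately so a crash right after still records the company.
        with self.path.open("a", encoding="utf-8") as fh:
            fh.write(company_id + "\n")
        self.done.add(company_id)


def run_campaign(
    companies: Iterable[str],
    process: Callable[[str], None],
    register: RegionRegister,
    restart: Callable[[], None] = lambda: None,
    max_attempts: int = 3,
) -> Counter:
    """Process companies with bounded retries; one failure never stops the run."""
    stats: Counter = Counter()
    start = time.monotonic()
    for company_id in companies:
        if company_id in register.done:
            stats["skipped"] += 1  # flagged in an earlier run
            continue
        stats["visited"] += 1
        for _ in range(max_attempts):
            try:
                process(company_id)
            except Exception:
                stats["errors"] += 1
                restart()  # e.g. recreate the browser session before retrying
                continue
            register.mark(company_id)
            stats["succeeded"] += 1
            break
        else:
            stats["abandoned"] += 1  # left unflagged so a later run retries it
    stats["elapsed_ms"] = int((time.monotonic() - start) * 1000)
    return stats


def audit_line(region: str, stats: Counter) -> str:
    """Serialize campaign counters as one JSON log line."""
    return json.dumps({"region": region, **stats}, sort_keys=True)
```

Re-running `run_campaign` against the same register file skips every company already flagged and retries only the abandoned ones.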

Future Directions

- Extend proxy health checks with automatic failover lists.

- Add anomaly-detection dashboards to highlight sudden shifts in attestation volumes.

- Explore lightweight headless browsers for low-volume spot checks while keeping Selenium for full campaigns.